To find the necessary current applied to an 8 ohm load to produce 10,000 watts. (link to Ohm's Law Pie Chart, scroll down to bottom of link) 's%20Law.htm Sqrt() = square root of the number in parentheses. I think you are making this more complicated than it needs to be. I can't find any good way of calculating an amplifier's instantaneous peak, and because it's looked at as a useless number, it seems to be difficult to find. However, I have no idea if that's correct or the right way of looking at it. I mean, that's 750,000 watts per millisecond then. If an outlet can produce a maximum of, say, 3000 joules, and we reduce this to 1 ms, then I do start to see how an amplifier could potentially produce some insane numbers, if it could utilize that much energy.
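For what it's worth, the Ohm's law step mentioned above (current needed for 10,000 watts into 8 ohms) can be sketched in a few lines. This is only the textbook relation P = I² × R rearranged, treating the load as a pure 8 ohm resistance, which a real speaker is not; the function names are made up for illustration.

```python
import math

def current_for_power(power_w, load_ohms):
    """Current (amps) through a resistive load for a given power: I = sqrt(P / R)."""
    return math.sqrt(power_w / load_ohms)

def voltage_for_power(power_w, load_ohms):
    """Voltage (volts) across a resistive load for a given power: V = sqrt(P * R)."""
    return math.sqrt(power_w * load_ohms)

# Assumption: the 8 ohm load is purely resistive.
amps = current_for_power(10_000, 8)
volts = voltage_for_power(10_000, 8)
print(f"{amps:.1f} A at {volts:.1f} V into 8 ohms")
```

These are the values at the load, not at the wall outlet; the current drawn from the mains depends on the line voltage and the amplifier's efficiency.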

I couldn't find any good charts, just an article that said 2 joules equals 500 J/ms. Now I understand that a peak impulse could last less time yet, and the only thing I could find was that a single millisecond would multiply that times 250. 14,400 watts for 1 second equals 14,400 joules. That's 240 squared divided by 8 ohms, which is 7,200 watts, or 14,400 watts RMS.
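A quick way to sanity-check the watts and joules figures above is the basic relation energy = power × time, together with P = V² / R. The sketch below assumes a purely resistive 8 ohm load and, for the last function, a sine wave whose peak is the stated voltage; the function names are only illustrative.

```python
def energy_joules(power_w, seconds):
    """Energy delivered at a constant power: E = P * t."""
    return power_w * seconds

def power_from_voltage(volts, load_ohms):
    """Power into a resistive load at a given voltage: P = V^2 / R."""
    return volts ** 2 / load_ohms

def sine_average_power(peak_volts, load_ohms):
    """Average power of a sine wave with the given peak voltage (half the peak power)."""
    return power_from_voltage(peak_volts, load_ohms) / 2

print(energy_joules(14_400, 1.0))      # energy over one second
print(energy_joules(14_400, 0.001))    # energy over one millisecond
print(power_from_voltage(240, 8))      # power at 240 V into 8 ohms
print(sine_average_power(240, 8))      # assumes 240 V is the sine peak
```

Note that shortening the time window does not raise the power: the same 14,400 watts sustained for one millisecond simply delivers a thousandth of the energy.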

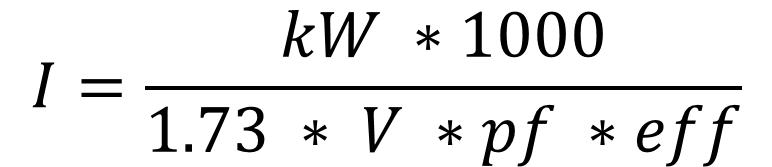

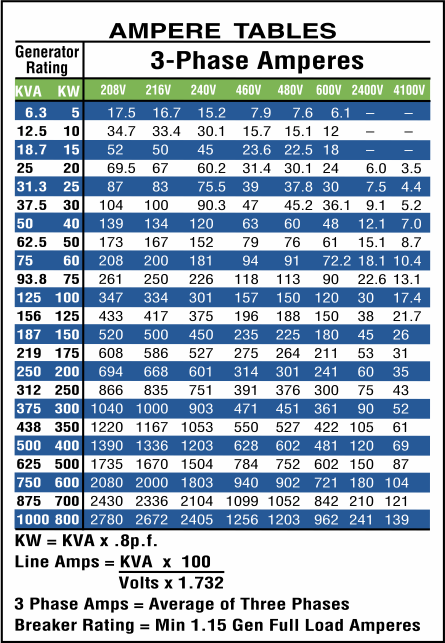

Using the formula on Wikipedia for peak power (no idea if this is a correct formula), it says: take the peak-to-peak voltage, then find the peak amplitude it can drive (I assumed it was half the peak-to-peak), square that, then divide by the impedance, and multiply by the efficiency rating. 120 volt rails x 2 is 240 volts P-P; bridged, as you put it, is then 480, but then divided in half for peak amplitude. I would even question if some of the amps he is mentioning have enough output devices to support that. It seems like physics alone dictates that 10 kW from a normal household outlet is next to impossible. This method may be too inaccurate or too slow to get an accurate reading, but doing this, I've never even seen 100 amps through the AC lines when it does trip a breaker. Now this probably is a very flawed test, but I have used an AC inductive current meter from Fluke to measure the amount of current that is drawn at startup by many of my amplifiers. His amp uses a switchmode supply, and those don't ever seem to have much reserve capacitance, so am I right to think that the outlet would need to supply most of the current? Wouldn't even a brief peak at 150-200 amps be enough to not only trip the breaker but potentially melt the insulation on 14 gauge Romex? The last amplifier I used rated at even 5,000 watts RMS used 240 volt lines because of the current problems. More current at 100 volts, which is probably closer to the rails of such an amp, but I don't know. I'm curious, because he is now saying he tested his amp to 18,000 watts and is running more than one of them. Is that even possible for peaks? I mean, it would require over 150 amps of current at 117 volts.
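The two calculations in this post can be written out as a rough sketch: the peak power formula as described above (half the peak-to-peak voltage, squared, divided by the impedance, times efficiency) and the mains current a given power would need at 117 volts. The function names are hypothetical, the efficiency of 1.0 is a placeholder assumption rather than a real rating, and the mains figure ignores power supply losses and power factor, so the real draw would be higher.

```python
def peak_power_from_pp(v_peak_to_peak, load_ohms, efficiency=1.0):
    """Peak power per the formula described in the post:
    (Vpp / 2)^2 / R * efficiency. Efficiency of 1.0 is an assumed placeholder."""
    peak_amplitude = v_peak_to_peak / 2
    return peak_amplitude ** 2 / load_ohms * efficiency

def mains_current(power_w, line_volts):
    """Current drawn from the line for a given power, ignoring losses: I = P / V."""
    return power_w / line_volts

# 120 V rails, bridged: 480 V peak-to-peak across an 8 ohm load.
print(f"{peak_power_from_pp(480, 8):.0f} W peak")

# Line current needed at 117 V for the claimed power figures.
for claimed_watts in (10_000, 18_000):
    print(f"{claimed_watts} W -> {mains_current(claimed_watts, 117):.1f} A at 117 V")
```

This only covers power that has to come from the outlet at that moment; energy already stored in the supply capacitors can cover part of a very short peak, which is why the switchmode supply question matters.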

I said that, first, I did it because I like to DIY; second, I don't like pro audio amplifiers because of the fans; and third, I highly doubt he was getting 10,000 watts from his amplifiers. He was arguing that I wasted my money and should have purchased a used QSC or Crest amplifier, because I could have had 10,000 watts for 500 dollars, or whatever. I was talking to someone on another forum about my amp project. I don't want or need this; it's a curiosity question.
